1️⃣ Where to test bots

💬 Chatbot

Test window: https://<company-name>.bot.coworkers.ai/#/<instance-name>

Admin URL: https://<company-name>.bot.coworkers.ai/admin/#/<instance-name>
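Both addresses follow the same pattern, so they can be built from the company name and the instance name. The helper below is only a minimal sketch: the function name `bot_urls` and the values `acme` and `support-bot` are made-up placeholders, not real accounts.

```python
def bot_urls(company_name: str, instance_name: str) -> dict[str, str]:
    """Build the test-window and admin URLs for one bot instance."""
    base = f"https://{company_name}.bot.coworkers.ai"
    return {
        "test": f"{base}/#/{instance_name}",
        "admin": f"{base}/admin/#/{instance_name}",
    }


# "acme" and "support-bot" are hypothetical placeholder values.
urls = bot_urls("acme", "support-bot")
print(urls["test"])
print(urls["admin"])
```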

For quick access to the chat testing page, open any instance and click the text bubble 💬 icon in the top-right corner. Then, select the instance you want to test.

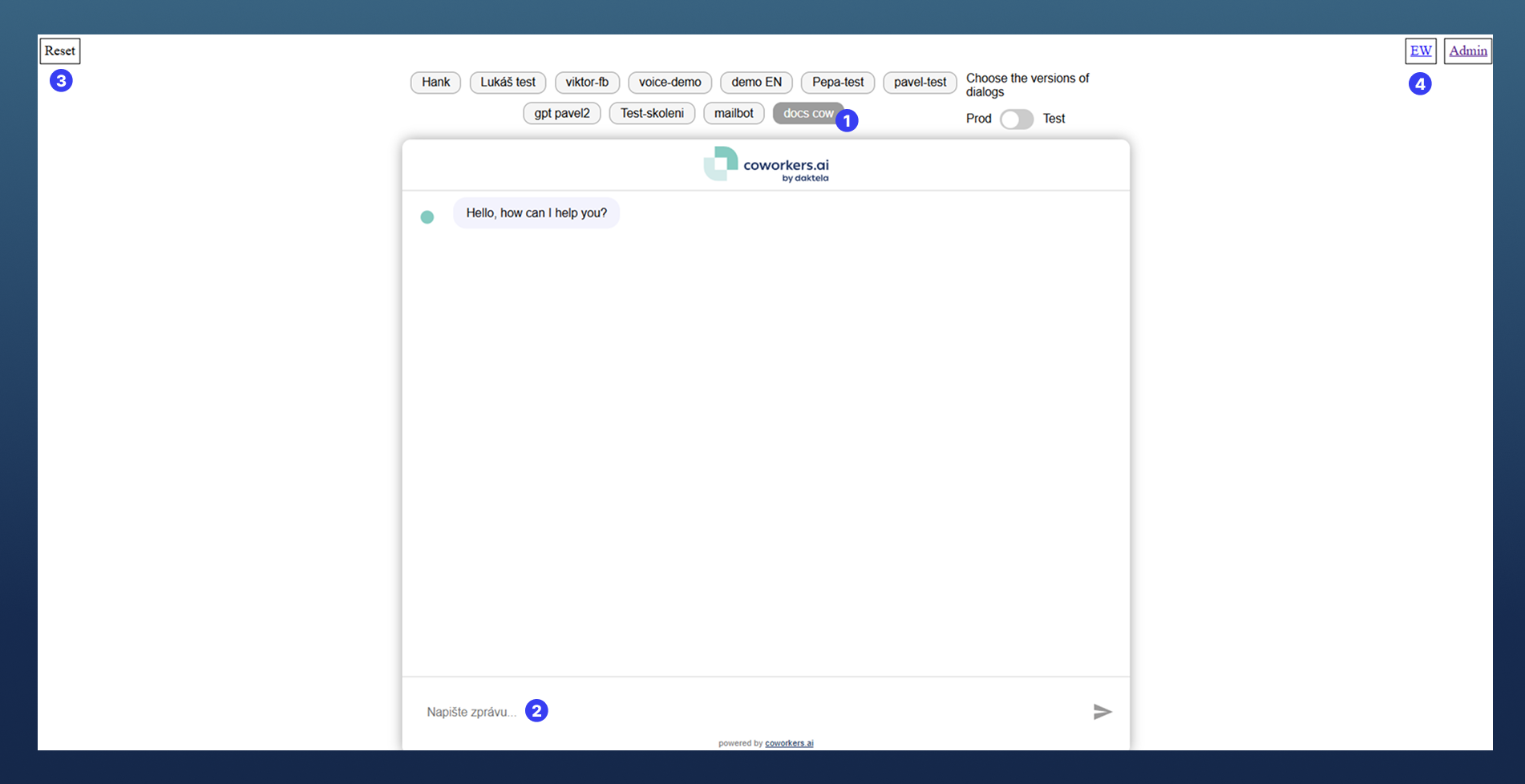

👉 What you can find in the test window:

- Instance selector (1️⃣) – Located at the top. Use it to switch to the specific bot instance you want to test.
- Chat window (2️⃣) – Type your questions here and see the bot’s replies in real time.
- Reset button (3️⃣) – Clears the current conversation so you can start a new one.
- EW button (4️⃣) – Located in the top-right corner. Opens the documentation page with the script for deploying the chatbot on a website or in an application. On this page, you can also preview how the chat window will look in real use.

📞 Voicebot

Each voicebot has a dedicated phone number.

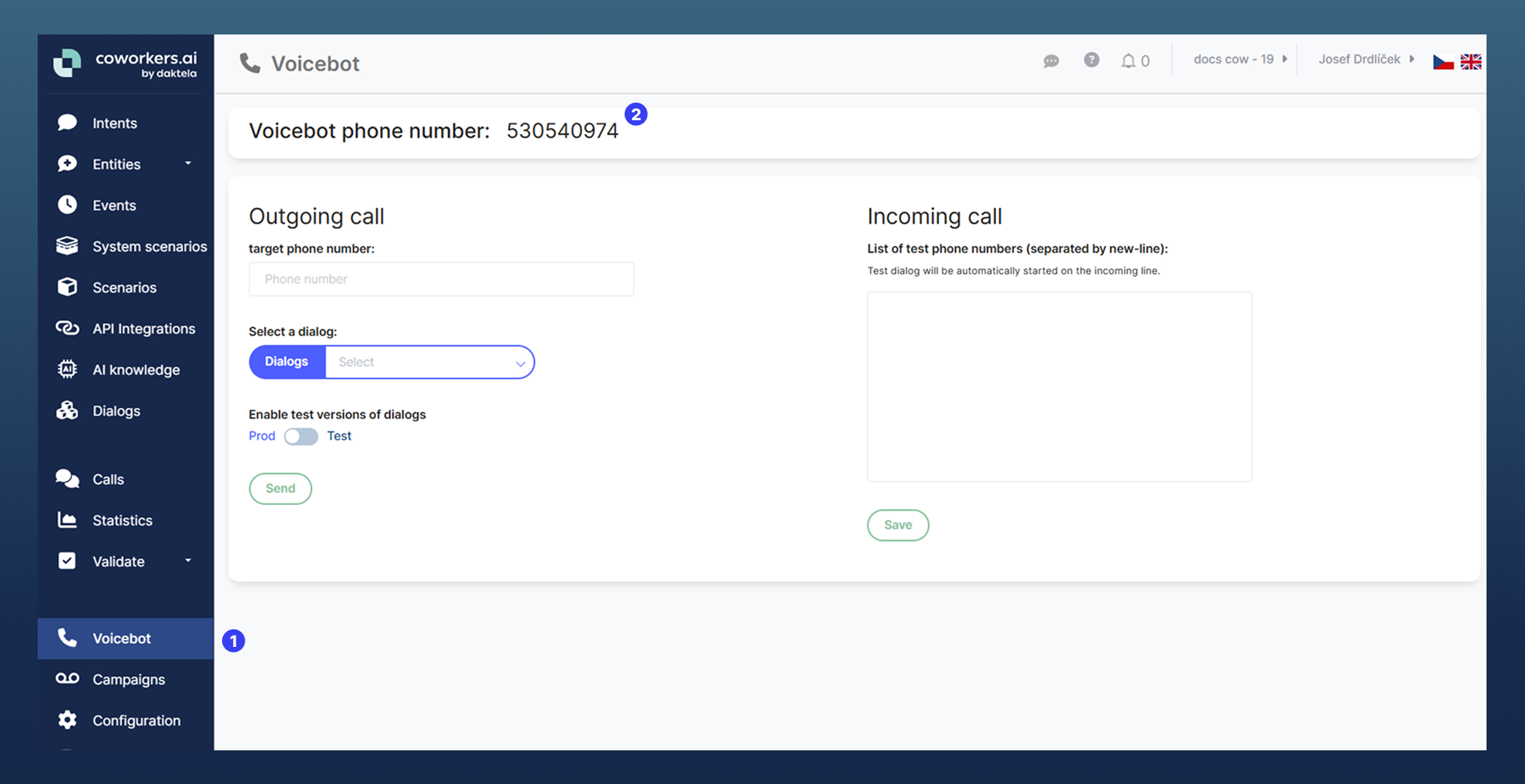

You can find it in the Voicebot section of the admin panel:

- In the left menu, click on "Voicebot" (1️⃣).
- The test phone number is displayed at the top of the page under "Voicebot phone number" (2️⃣).
- Admin URL: https://<company-name>.bot.coworkers.ai/admin/#/<instance-name>

For quick testing of dialog flow and logic, voicebots can be tested the same way as chatbots: via text in the testing chat window. However, always test your voicebots with real calls as well. Only a real call reproduces the actual user experience and verifies voice-specific functions.

✉️ Emailbot

- Used for selected email queues and categories (in Daktela) as defined in the project scope.

  Note: This testing applies only to Daktela-connected emailbots. Emailbots for O365 or Outlook are tested separately in their respective environments.
- Admin URL: https://<company-name>.bot.coworkers.ai/admin/#/<instance-name>

2️⃣ What to test – types of answers

✅ Dynamic answers

- The bot searches for answers in documents, tables, or websites.
- The answer is only as good as the sources you provided. If certain information is missing in the sources, the bot cannot invent it.
- Also see: 🧠 AI Knowledge

🔄 Integrated answers

- The bot retrieves information by calling another system (API), such as order status or shipment tracking.
- The bot always answers based solely on the data returned from your system:
  - If the API response does not contain a tracking link, the bot cannot include it in the answer.
  - If the API returns status "new", the bot cannot use text meant for the status "in progress".
- The logic is simple: whatever the API returns → that’s what the bot uses in the answer.
- Also see: 💻 API Integrations
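The rule "whatever the API returns is what the bot uses" can be illustrated with a short sketch. Everything in it is an assumption made for illustration: the field names `status` and `tracking_url`, the reply texts, and the function `build_order_reply` are hypothetical and do not describe the actual integration.

```python
# Hypothetical reply texts per order status; real texts come from the agreed scope.
STATUS_TEXTS = {
    "new": "Your order has been received.",
    "in progress": "Your order is being prepared.",
}


def build_order_reply(api_response: dict) -> str:
    """Compose a reply using only the fields present in the API response."""
    text = STATUS_TEXTS.get(api_response.get("status"))
    if text is None:
        # Missing or unknown status: the bot has nothing it can truthfully say.
        return "I could not find the status of your order."
    tracking_url = api_response.get("tracking_url")
    if tracking_url:
        # The link is added only when the API actually returned one.
        text += f" You can track it here: {tracking_url}"
    return text


print(build_order_reply({"status": "new"}))
print(build_order_reply(
    {"status": "in progress", "tracking_url": "https://example.com/track/123"}
))
```

When a test shows a missing link or an unexpected status text, it is therefore worth checking the API response first, before marking the bot's answer as NOK.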

📧 Static answers

- The bot uses predefined texts or templates, selected according to the type of question (intent).
- These responses are fixed and always the same for the given situation. They are typically created without using a generative LLM: you define both the logic and the replies yourself.
- If you rely on static answers and haven’t accounted for a particular situation in the dialog flow (for example, if the bot asks for an order number but the customer replies “Where can I find the order number?”), the bot won’t be able to respond adequately unless this situation and the corresponding reply are defined in the flow.
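A static answer behaves roughly like a lookup table from intent to text. The sketch below is a simplified illustration, not the platform's implementation; the intent names, reply texts, and the function `static_reply` are all hypothetical.

```python
# Hypothetical intents and replies defined by the bot designer.
STATIC_REPLIES = {
    "opening_hours": "We are open Monday to Friday from 9 am to 6 pm.",
    "ask_order_number": "Please tell me your order number.",
}

FALLBACK_REPLY = "I am sorry, I cannot help with that. I will transfer you to an operator."


def static_reply(intent: str) -> str:
    """Return the predefined text for an intent, or the fallback if none is defined."""
    return STATIC_REPLIES.get(intent, FALLBACK_REPLY)


print(static_reply("opening_hours"))
# This intent was never defined in the flow, so only the fallback is available.
print(static_reply("where_is_order_number"))
```

The second call shows why an unplanned question cannot be answered: no entry exists for it until someone adds the situation and its reply to the flow.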

3️⃣ How to test step-by-step

👀 Step 1: Find the record

🅰️ Chatbot and Voicebot

- Open the Discussions / Calls section (depending on what you are testing). Learn more here: 4 How to find discussions (calls, chats, e-mails)

🅱️ Emailbot

- Emailbot testing is performed directly in Daktela.
- For tickets that meet the processing conditions, you will see the "Emailbot" section.

👉 In the Emailbot section, fill in:

- High level – Main category of the query (e.g., "order")
- Low level – Subcategory of the query (e.g., "status")
- Emailbot feedback – Select OK (correct) or NOK (incorrect)
- Feedback – Describe what was wrong and how it should be corrected
- Emailbot discussion URL – Link to the discussion in the emailbot application

✅ Step 2: Evaluate the answer

- Select ✅ OK (correct), ❌ NOK (incorrect), or OK – marked.
  - OK: The answer was correct.
  - NOK: The answer was incorrect.
  - OK – marked: The answer was correct, but something should be adjusted (such as wording or style).

💬 Step 3: Write a comment (for NOK or OK – marked)

- In the "Feedback" field, write what was wrong and how it should be corrected.
- Example: "The bot wrote 'Not processed', but it should have been 'Shipped'."

4️⃣ What to evaluate

👉 Main rule: Only evaluate whether the bot behaved according to the agreed scope.

Do not evaluate what the bot "could have said" or what "would be nice". The bot can only do what was agreed in the scope.

✅ If the answer was correct → mark OK.

❌ If the answer was incorrect → mark NOK and add a comment:

- what the correct answer should have been
- why the current answer was wrong

📌 How to write feedback

- Describe the error clearly and specifically.
- Do not evaluate topics outside the scope (the bot does not read minds). You can mark such cases as "For development" and later they can be added to the scenario.
- If you test the same failing case 10 times, it is statistically just 1 NOK – 10× the same error is still one error.

📌 Example of bad feedback

Client asks: "What are the opening hours of the branch on Svoboďák?"

Bot answers: "Monday to Friday from 9 am to 6 pm."

Feedback: "The bot did not provide contact details."

➡️ This is not evaluated as an error – the customer did not ask for contact details, so it is not the bot’s mistake.

📊 Evaluation

- Bot success rate is measured by the number of OK vs. NOK in the Discussions section / for Emailbot directly in Daktela.
- Keep in mind: there is no 100% perfect bot – there will always be some questions that will not work ideally.
- That is why we recommend having fallback scenarios (e.g., transfer to an operator) so that the customer is not left without help.
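As a rough illustration of the OK vs. NOK count, the sketch below computes a success rate in which a failing case that was retested several times counts as a single NOK. The exact formula used in reporting is not specified here, so the ratio OK / (OK + distinct NOK), the record format, and the function `success_rate` are assumptions.

```python
def success_rate(records: list[tuple[str, str]]) -> float:
    """Share of OK results, counting repeated NOKs for one test case once.

    Each record is (test_case, verdict) with verdict "OK" or "NOK".
    """
    ok = sum(1 for _, verdict in records if verdict == "OK")
    distinct_nok = len({case for case, verdict in records if verdict == "NOK"})
    total = ok + distinct_nok
    return ok / total if total else 0.0


# Hypothetical test log: the same failing case was retested three times.
records = [
    ("order status", "OK"),
    ("opening hours", "OK"),
    ("return policy", "OK"),
    ("tracking link", "NOK"),
    ("tracking link", "NOK"),
    ("tracking link", "NOK"),
]
print(f"{success_rate(records):.0%}")  # 3 OK and 1 distinct NOK
```

Without the deduplication, the same log would show 3 OK against 3 NOK and make the bot look far worse than it is.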

Watch out for TTS and STT (voicebot only)

👉 What it means:

- TTS (Text to Speech) = the text is spoken aloud by a robotic voice.
- STT (Speech to Text) = your voice is transcribed into text, which the bot then processes.

➡️ The bot never hears your voice directly. It only works with the transcript. What you say may not be exactly what the bot sees.

🎤 What affects STT:

- Background noise (office noise, other people talking)
- Fast / unclear speech (mumbling, swallowing words)
- Uncommon names and foreign words (mis-transcription)
- Strong accent
- Poor signal or low-quality microphone

📌 Example 1 – Name:

You say: "My name is Xénie Czibereová."

STT writes: "my name is sénie čiberová."

➡️ The bot searches in the database for "sénie čiberová". No match found because of incorrect transcription → the data cannot be extracted.

📌 Example 2 – Address:

You say: "I live in Brno, Stamicova street 5"

STT writes: "I live in brno, stát micova five."

➡️ The bot searches in the database. Street does not match → the address cannot be extracted.
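Both examples come down to a lookup that receives the transcript rather than what was actually said. The sketch below is a simplified illustration that assumes an exact-match lookup; the `CUSTOMERS` table, the customer ID, and the function `find_customer` are hypothetical and do not describe how any particular bot searches its data.

```python
# Hypothetical customer records keyed by lowercase full name.
CUSTOMERS = {
    "xénie czibereová": {"customer_id": 1042},
}


def find_customer(transcript_name: str):
    """Look up a customer by the name exactly as it appears in the transcript."""
    return CUSTOMERS.get(transcript_name.strip().lower())


# The name as it was spoken: the record is found.
print(find_customer("Xénie Czibereová"))
# The name as STT transcribed it: no record matches, so the result is None.
print(find_customer("sénie čiberová"))
```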

📝 Testing summary:

- If the bot does not recognize a name or address, the cause is usually not the bot’s logic but an STT transcription error.
- STT is a third-party technology (Google, Microsoft, etc.). We always use the best available on the market, but it will never be 100% accurate.
- It is like dictating an SMS on your phone – usually it works, but sometimes it writes the name or number incorrectly.

What we learned

Now you know where to test the bot, how to evaluate answers, and how to write feedback so that developers can easily understand and fix the issues.