The Agent module is a smart, powerful LLM assistant that follows your defined dialog flow and rules, stays on topic, and politely blocks off-topic requests—while still thinking and acting autonomously. It doesn’t just repeat scripts: it adapts to conversations and unexpected replies, makes decisions in real time, and keeps the dialog moving naturally while respecting the limits and conversational style you set.

The Agent can also access and work with API Integrations and your AI Knowledge Base. For more information, see 🤖 Tools and API Integrations and 🤖 AI Knowledge in Agent below.

💡 When used appropriately, one or a few Agent modules can handle most of your dialogs and replace many other modules in the dialog flow.

🤖 Name of the Agent

This is just the display name (label) of the module. It does not affect functionality.

🤖 LLM Model

Choose the OpenAI LLM model the Agent will use.

- If no model is chosen, the Agent uses GPT-4.1 by default.

- The Agent, like other LLM-driven modules, consumes AI Credits with every interaction.

- You can balance cost and performance by choosing a different model. For example, the “mini” models are usually cheaper (i.e., they consume fewer AI Credits) and faster, which matters especially in voicebots, where response times are important, but they might be less accurate on very complicated tasks. Conversely, the “full” models are more powerful but can be unnecessarily costly for simple tasks such as FAQs.

- If you don’t know which model to choose, use the default. If price is a concern, start with a “mini” model and switch to a full model only if the responses are unsatisfactory.

🤖 Instructions for Agent (user prompts)

The Agent will follow the instructions (prompts) written here. Fill in these instructions with care: the better the instructions, the more closely the bot will behave according to your vision. Follow this simple premise: if another human can clearly understand your prompt, the bot probably can too.

Also see 🤖 Tips & Best Practices

For Knowledge Base Agent Instructions, see 🤖 AI Knowledge in Agent

Click the pencil ✏️ icon to open the instructions editor. Provide clear, structured, step-by-step instructions for the bot, and avoid contradictions.

The prompt below is an example of a multi-step instruction that includes an API integration.

1️⃣ Start by defining the bot's identity, role, and basic principles

WHO YOU ARE AND WHAT YOUR ROLE IS:

- You are Sunny, an incoming voicebot from WeatherReport.bot with a male persona.

- Your role is to quickly and clearly answer two types of queries: (1) weather forecast for a specific location, and (2) general weather-related FAQs.

- You use the predefined integrations latLongByLocation and weatherForecast.

- There is no live operator. Do not ask whether the user prefers to speak to a live operator, and do not confirm such requests. If the user explicitly requests a human → apologize and explain that only you are on the line.

- Today's date and time is $sys_date_time

- Today's day of week is $sys_day_of_week

2️⃣ Define the appropriate communication style and tone

COMMUNICATION STYLE:

- Always reply in English.

- You are a voicebot, so your answers must be structured for speech and listening comprehension: short sentences, natural pace, and suitable for TTS (Azure). Spell out all abbreviations, numbers, dates, and times in full words (for example: “on the twenty-third of August at ten in the morning” or “thirteen hours and thirty minutes”).

- Tone: approachable, kind, helpful, considerate, polite, and direct. Light weather-related humor or puns are acceptable.

- Avoid formal or bureaucratic phrases, corporate jargon, an arrogant tone, and sarcasm.

- If a forecast indicates dangerous weather, warn the user, be sensitive, and avoid all jokes.

- If the user has no further questions, bid them goodbye and wish them pleasant weather.

3️⃣ Define the use cases you expect the Agent to handle by itself

Process the use cases below step by step:

### USE CASE 1) WEATHER FORECAST QUERIES (example: "What's the weather in Bologna tomorrow?")

Goal: If the user asks about weather, inform them about the weather in the specified location and timeframe

Step 1: Introduce yourself and ask about the location and time (e.g., “Hi there, I'm Sunny and I'll help you with a weather forecast. Please tell me where and when you need the forecast”).

Step 2: If no location is provided, ask again. If the location is unclear, ask for clarification. If no time was provided, give the forecast for today and tomorrow. You can only provide a forecast for the next 7 days.

Step 3: Call the latLongByLocation integration with the parameter "location" and get latitude and longitude from the result. If the location is not found → ask again and re-analyze the reply. If you still can't find it, say that you couldn't find weather data for that location and trigger output path “Location not found”.

Step 4: Call the weatherForecast integration with parameters "latitude" and "longitude".

Step 5: Evaluate

If weather data for the specified location and date is found, clearly communicate:

- maximum and minimum temperatures in Celsius for the chosen dates

- weather conditions (e.g., sunny, overcast, rainy, ...)

- any notable hazards or conditions (e.g., strong winds, hail, flood risk, ...)

- recommendations (e.g., "don't forget your raincoat")

### USE CASE 2) AI Knowledge Base FAQ

Use the Knowledge Base Agent Instructions to answer weather-related FAQs, except for actual weather forecast queries.

4️⃣ Add additional instructions – if needed

- After completing a request, always ask: “Can I help you with anything else?”

- If the user says they don't need anything else, says "goodbye", or indicates they want to end the conversation → trigger the output path “Goodbye.”

Tip: You can use other publicly available LLM tools to help you generate similar instructions for your bot. If you do, always double-check the generated prompts and correct any mistakes or contradictions.

The above prompt was an example of a relatively simple dialog with just a couple of options and conditions.

Remember, the simpler and less confusing the prompt, the greater the chance the Agent will do exactly what you want it to do.

If your dialog is more complex, includes many complicated conditions, or calls multiple integrations, consider creating several Agents with simpler prompts for each individual task or use case. Then, chain the Agents together one after another. Also see 🤖 Tips & Best Practices.

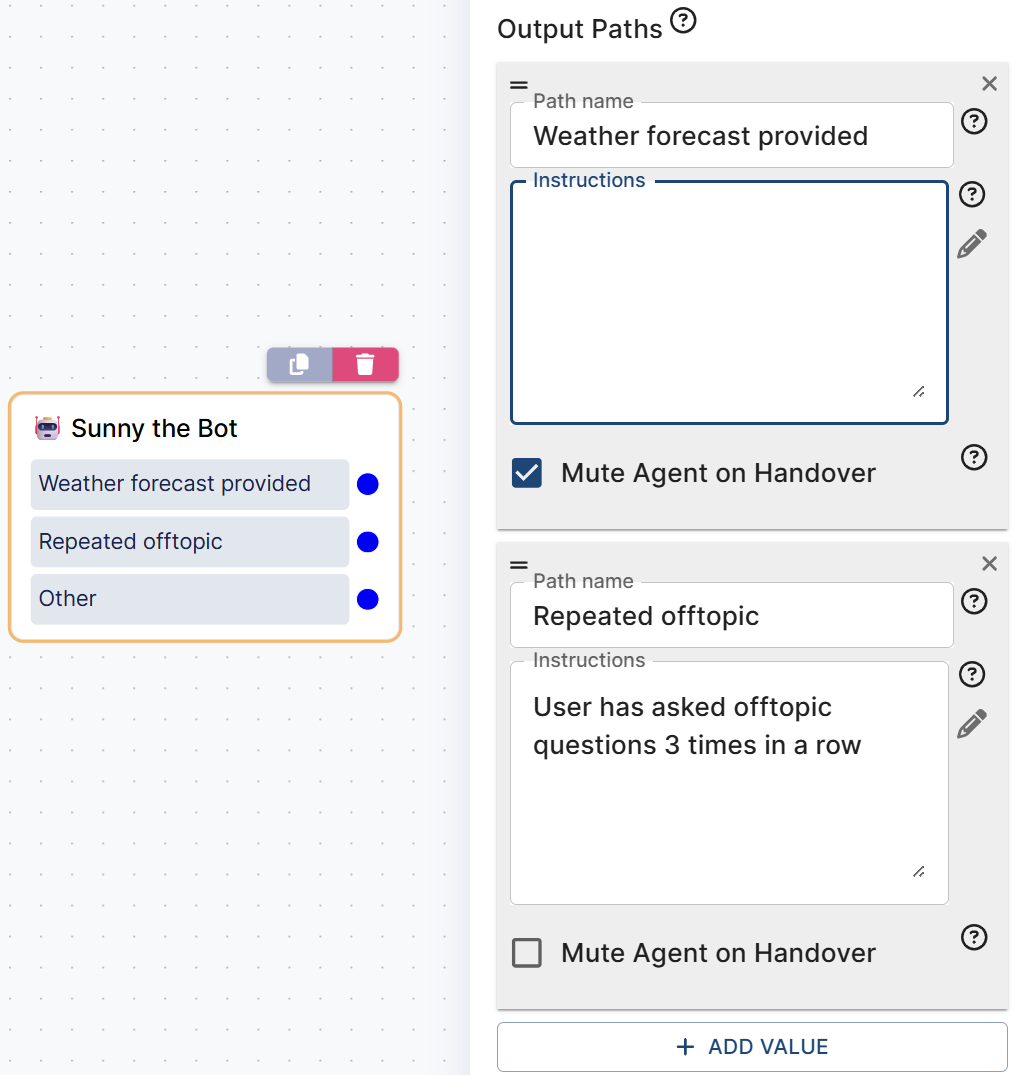

🤖 Output Paths

The Agent will use Output Paths whenever a defined condition is fulfilled. For example, you can set up paths such as Weather forecast provided and Repeated offtopic. The Agent will then exit using the output path Weather forecast provided when it successfully retrieves and delivers the weather forecast, and path Repeated offtopic when the user repeatedly asks off-topic questions. You can then continue building your standard dialog flow based on what happened in the Agent.

The output path Other is automatically triggered if no described condition or behavior applies, or in case of an error.

Unlike other modules, the Agent can interact with the user and handle a conversation while “staying inside the module” - i.e., without continuing the flow using arrows. An output path is only used when a condition described in the Agent’s instructions is fulfilled.
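To make the “staying inside the module” behavior concrete, here is a toy Python model of the control flow. It is only a mental model, not how the Agent is implemented: in the real module the LLM, guided by your instructions, decides when a condition is fulfilled, whereas this sketch uses a hypothetical keyword classifier (`keyword_classifier`) and a hypothetical `run_agent` loop. The path names match the examples above.

```python
from typing import Callable, Iterable


def keyword_classifier(message: str) -> str:
    """Toy stand-in for the LLM's judgement: 'forecast_delivered', 'ontopic' or 'offtopic'."""
    text = message.lower()
    if "forecast" in text:
        return "forecast_delivered"
    if any(word in text for word in ("weather", "rain", "wind", "snow")):
        return "ontopic"
    return "offtopic"


def run_agent(messages: Iterable[str], classify: Callable[[str], str], max_offtopic: int = 2) -> str:
    """Handle turns 'inside the module' and return the name of the output path used to exit."""
    offtopic_streak = 0
    try:
        for message in messages:
            label = classify(message)
            if label == "forecast_delivered":
                return "Weather forecast provided"
            if label == "offtopic":
                offtopic_streak += 1
                if offtopic_streak >= max_offtopic:
                    return "Repeated offtopic"
            else:
                offtopic_streak = 0  # on-topic turn: answered in place, no exit
    except Exception:
        return "Other"  # an error falls through to the automatic Other path
    return "Other"  # the input ended without any described condition applying


print(run_agent(["Tell me a joke", "Who won the match?"], keyword_classifier))
print(run_agent(["Is it windy in Bologna?", "Give me the forecast"], keyword_classifier))
```

Each on-topic turn is handled without leaving the loop; the function only returns (that is, exits via an output path) when a described condition is met, and falls back to Other on an error or when no condition applied.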

1️⃣ Path Name

- The name of the output path, e.g., “Weather forecast provided” and “Repeated offtopic”.

- You can instruct the Agent to use a defined output path when a condition is fulfilled, e.g., when the Agent successfully provides a weather forecast.

2️⃣ Instructions

- Additional instructions applicable only to the specified output path. Add any extra instructions you did not include in the general Agent instructions, and make sure the prompts here and in the general instructions don’t contradict each other.

3️⃣ Mute Agent on Handover

- If this box is ticked ☑️, the Agent will not say anything before using this output path.

- If this box is left unticked, the Agent might generate a custom response before using this output path.

Example for the setup below:

- If the Agent provides the weather forecast, it then simply continues via the “Weather forecast provided” output path.

- However, if the user repeatedly asks off-topic questions, the Agent will generate a customized message such as “I’m sorry, I really don’t have answers to your questions, I can only help you with questions about the weather.” before exiting via the “Repeated offtopic” output path. Then you can add more standard modules and continue your dialog flow.

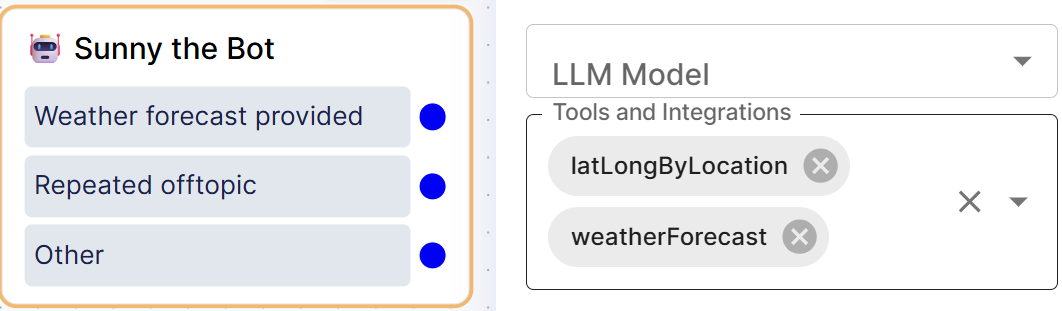

🤖 Tools and API Integrations

The Agent can access and call 💻 API Integrations, and work with their results, all while keeping the conversation going. If any required information is missing, the Agent can recognize this and ask the user for the missing information before making API calls.

Calling API integrations from the Agent is a powerful advanced feature, but it is not required for simpler tasks such as answering FAQs, collecting a user’s personal data, or analyzing the user’s requests. If you don’t need the Agent to get data from or send data to other systems, you can skip this part: the Agent is perfectly usable without integrations.

How to set up API integrations for the Agent

1️⃣ Choosing API integrations

- Simply select the integrations in the drop-down box in the Agent module settings.

- The bot will receive access only to the selected API integrations.

- (These integrations must first be defined in 💻 API Integrations.)

2️⃣ Defining API integrations

To enable API integrations for your Agent, you must complete the Inputs for Agent tab in the API Integrations settings.

See https://coworkersai.atlassian.net/wiki/x/BgDoD for more details.

3️⃣ Telling Agent how to use integrations

Now you must tell the Agent how to use these integrations as part of the actual dialog. Define their usage in the Agent’s instructions (Instructions for Agent (user prompts)).

- Tell the Agent which parameters to use and what to do with the result, e.g.:

Step 3: Call the latLongByLocation integration with the parameter "location" and get latitude and longitude from the result. If the location is not found → ask again and re-analyze the reply. If you still can't find it, say that you couldn't find weather data for that location and trigger output path “Location not found”.

Step 4: Call the weatherForecast integration with parameters "latitude" and "longitude".

Step 5: Evaluate

If weather data for the specified location and date is found, clearly communicate:

- maximum and minimum temperatures in Celsius for the chosen dates

- weather conditions (e.g., sunny, overcast, rainy, ...)

- any notable hazards or conditions (e.g., strong winds, hail, flood risk, ...)

- recommendations (e.g., "don't forget your raincoat")

The Agent calls integrations one after another in the defined sequence, not all at once. Be careful when calling more than one API, as each additional call may lengthen the response time, which is especially noticeable in voicebots.
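The sequential behavior can be pictured with the sketch below. `lat_long_by_location` and `weather_forecast` are hypothetical Python stand-ins for the latLongByLocation and weatherForecast integrations, and the coordinates, forecast values, and latencies are made-up illustration data (the retry step from Step 3 is omitted). Because the second call needs the first call’s output, the two calls cannot overlap, so their response times add up.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical lookup table standing in for the latLongByLocation integration's data.
KNOWN_LOCATIONS = {"bologna": (44.49, 11.34)}


@dataclass
class CallResult:
    data: Optional[dict]
    latency_ms: int  # made-up latency, used only to show that sequential calls add up


def lat_long_by_location(location: str) -> CallResult:
    coords = KNOWN_LOCATIONS.get(location.strip().lower())
    data = None if coords is None else {"latitude": coords[0], "longitude": coords[1]}
    return CallResult(data, latency_ms=400)


def weather_forecast(latitude: float, longitude: float) -> CallResult:
    # Stub: ignores the coordinates and returns fixed sample data.
    return CallResult({"max_c": 24, "min_c": 15, "conditions": "sunny"}, latency_ms=600)


def forecast_for(location: str) -> Tuple[str, Optional[dict], int]:
    """Step 3, then Step 4: returns (output_path, forecast, total_latency_ms)."""
    geo = lat_long_by_location(location)
    if geo.data is None:
        return "Location not found", None, geo.latency_ms
    weather = weather_forecast(geo.data["latitude"], geo.data["longitude"])
    return "Weather forecast provided", weather.data, geo.latency_ms + weather.latency_ms


print(forecast_for("Bologna"))
print(forecast_for("Atlantis"))
```

With two chained calls, the user waits for the sum of both latencies; every further integration in the chain adds its own wait on top.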

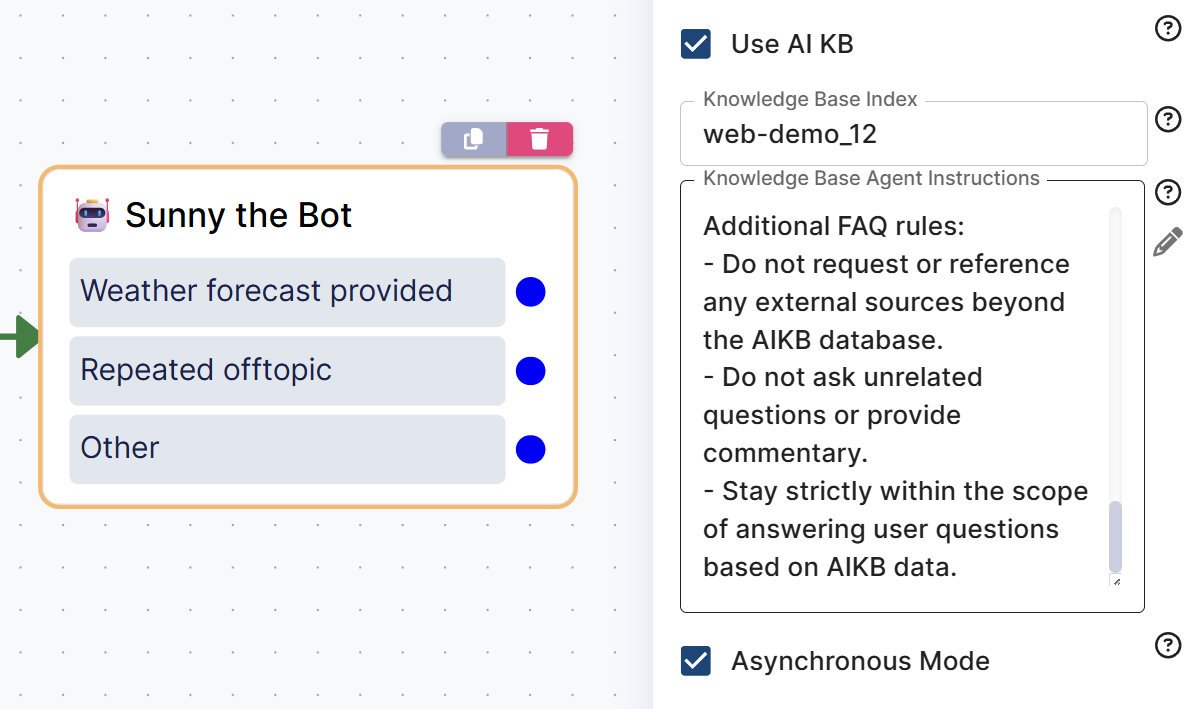

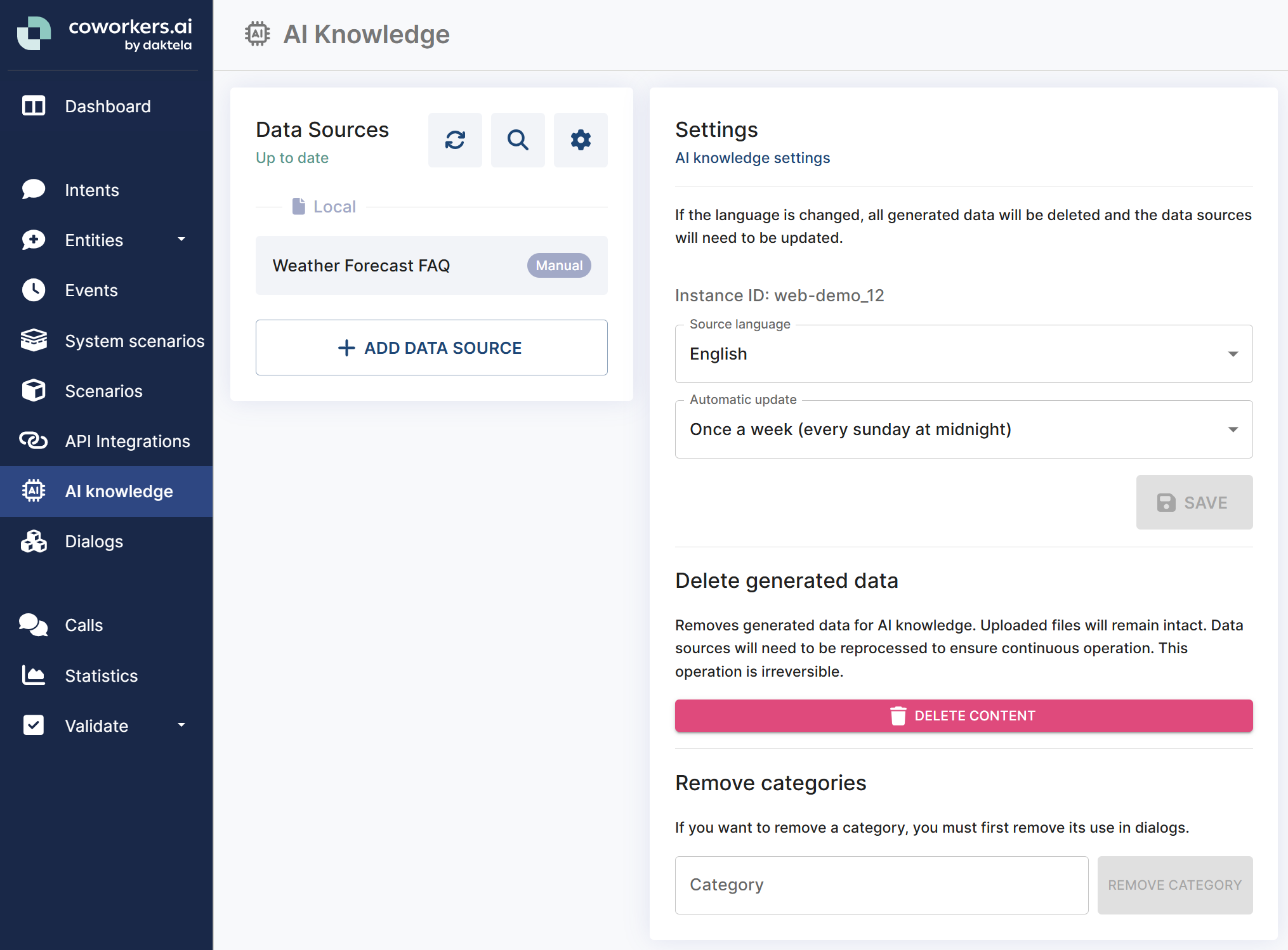

🤖 AI Knowledge in Agent

The Agent can access and work with your files and other knowledge stored under the AI Knowledge tab (see https://coworkersai.atlassian.net/wiki/x/AQCiBg).

1️⃣ To enable access to the AI Knowledge database, simply tick ☑️ the Use AI KB checkbox in the Agent module’s settings.

2️⃣ Then, fill in the Knowledge Base Index name. Currently, the Knowledge Base Index name is the Instance ID, which you can find in ⚙️ Settings on the left panel of the AI Knowledge tab (see 1️⃣ Add/Edit knowledge | Toolbar (3)). Enter this Instance ID as the Knowledge Base Index name.

3️⃣ Fill out the Knowledge Base Agent Instructions. Use the same principles as for the main Instructions for Agent (user prompts), but focus on the desired behavior of the AI Knowledge function.

The Agent will then answer the question (or act according to the Knowledge Base Agent Instructions) and then proceed to act according to the main Instructions for Agent.

4️⃣ In the main Instructions for Agent, don’t forget to mention when to use the knowledge base. E.g.,

### USE CASE 2) AI Knowledge Base FAQ

Use the Knowledge Base Agent Instructions to answer weather-related FAQs, except for actual weather forecast queries.

5️⃣ For voicebots, and for chatbots where you expect very fast reactions, tick ☑️ Asynchronous Mode. This enables asynchronous processing of long-running requests: the module immediately responds with a placeholder message, processes the request in the background, and delivers the final response once it is ready. This is useful for complex queries that may take time to compute.
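The placeholder-then-final-answer pattern can be illustrated with Python’s `asyncio`. This is a generic sketch of asynchronous processing, not the module’s actual implementation; `slow_kb_lookup`, the placeholder wording, and the 0.1-second delay are all hypothetical.

```python
import asyncio
from typing import Awaitable, Callable


async def slow_kb_lookup(question: str) -> str:
    """Hypothetical stand-in for a long-running knowledge-base query."""
    await asyncio.sleep(0.1)
    return f"Here is what I found about {question}."


async def handle_async(question: str, send: Callable[[str], Awaitable[None]]) -> None:
    lookup = asyncio.create_task(slow_kb_lookup(question))  # start the work in the background
    await send("One moment, let me look that up.")  # the placeholder goes out immediately
    await send(await lookup)  # the final answer is delivered once it is ready


async def main() -> list:
    sent = []

    async def send(message: str) -> None:
        sent.append(message)  # collect messages instead of sending them to a user

    await handle_async("UV index", send)
    return sent


messages = asyncio.run(main())
print(messages)
```

The user hears or sees the placeholder right away instead of silence, which is why this mode helps most in voicebots.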

Unlike with Categories in AI Knowledge, you don’t need to configure which KB categories or sources to use: the Agent decides this out of the box.

🤖 How Agent reads and treats $context variables in instructions

When filling out the Agent’s instructions, you might want to refer to $context variables. Follow these rules when referring to $contexts in your prompts:

1️⃣ If you write a context name “as is”, with one $ sign prefix (e.g., $sys_date_time), the Agent will only read the value inside the context. You can use this to feed the Agent some external and already existing information it might need for the specified use case.

Your prompt:

- Today's date and time is $sys_date_time

- Today's day of week is $sys_day_of_week

- Your phone number is $voicebot_number

- The order number is $order_number

What the Agent actually sees:

- Today's date and time is 2025-10-23 14:51:02

- Today's day of week is 5

- Your phone number is +420530540550

- The order number is 1234567890
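The substitution rule from 1️⃣ can be sketched as a simple text replacement. This is a minimal Python illustration assuming `$` followed by a name made of letters, digits, and underscores, with a hypothetical `render_instructions` helper and a context store holding the example values above. How the platform handles unknown contexts is not described here, so the sketch simply leaves them as written.

```python
import re

# Hypothetical context store holding the example values shown above.
contexts = {
    "sys_date_time": "2025-10-23 14:51:02",
    "sys_day_of_week": "5",
    "voicebot_number": "+420530540550",
    "order_number": "1234567890",
}

CONTEXT_PATTERN = re.compile(r"\$([A-Za-z_][A-Za-z0-9_]*)")


def render_instructions(prompt: str, contexts: dict) -> str:
    """Replace every $name with its context value; unknown names are left as written."""
    def substitute(match: re.Match) -> str:
        return contexts.get(match.group(1), match.group(0))
    return CONTEXT_PATTERN.sub(substitute, prompt)


print(render_instructions("Today's date and time is $sys_date_time", contexts))
print(render_instructions('Save value "ABC" into context "order_number"', contexts))
```

The second call comes back unchanged because `order_number` has no `$` prefix, which is the behavior that rule 2️⃣ below relies on.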

2️⃣ If you want to tell the Agent to use a context variable (not just read it), or to save a specific value into a specific context, just tell it to use “context xyz” or save value “ABC” into context “nameOfYourContext”. The Agent will recognize what you want and will use or save the corresponding context accordingly.

❗In this case, do not use the $ prefix when referring to a context name. As shown in 1️⃣, the $ prefix will convert the context’s name into its value instead.

There is no need to make the Agent save everything into contexts unless you need to work with those contexts outside the Agent.

For example, if you just need to verify the client’s contract number based on their name, and you only care about whether they are verified or not, then:

❌ instead of this:

Step 1: Ask for user's name and surname. Save the name into context "name" and the surname into context "surname".

Step 2: Use context "name" and context "surname", put them into parameters "name=" and "surname=", and call API integration "verifyUser".

Step 3: In the response, find the <contract_number> and save it into context "contract_number".

Step 4: Ask the user "Is your contract number $contract_number?"

Step 5: If they confirm, set context "user_verified" to value "true".

✅ simply tell the Agent this:

Step 1: Ask for user's name and surname and use it to call API integration "verifyUser".

Step 2: Get the <contract_number> from the response and ask the user if that contract number is correct.

Step 3: If they confirm, set context "user_verified" to value "true".

The same logic was applied in Steps 3-5 in the prompt example for the weather forecast bot: 🤖 Agent Module | Instructions (user prompts) for Agent

🤖 Tips & Best Practices

While you can write the Agent’s instructions (prompts) in virtually any way and any style, we recommend following some proven best practices.

💡 Use human-readable instructions

- If another human can clearly understand your prompt, the Agent can probably understand it, too.

- The Agent is technically able to work with virtually any written text, so you can put pieces of code, JSON examples, or other machine-oriented text into the instructions, but the more you complicate them, the higher the chance that something will go wrong. Usually, there is no need to write any code into the instructions: just refer to the respective contexts or API integrations.

💡 Be specific

- It may sound obvious, but the Agent can’t read your mind and intentions. If you need it to do something, tell it to do something, and specify how it should approach the task. It does not implicitly know your intended conversation flow, company processes, exceptions, communication style, etc., and might act unpredictably when unprompted.

💡 Avoid contradictions

- If you define conditions (if …, then …), make sure to avoid contradictions. If one instruction says “If user didn’t tell you their name, ask again once, and if they still refuse, hang up” and another instruction somewhere else says “If they didn’t tell you their name, just ignore it and continue”, the Agent will behave inconsistently.

💡 Check all contexts

- If you refer to contexts, check that they are correct and make sense within the dialog flow. The more edits you make to your instructions during the dialog creation process, the higher the chance that some previously used contexts are no longer valid, have a different name, etc.

- Check the $ prefix signs:

  - When you expect the Agent to read the actual value of a context, write it with the $ sign.

  - When you just need the Agent to use a context or write into it, write it without the $ sign.

- See 🤖 How Agent reads and treats $context variables in instructions for more information.

💡 Split tasks into more Agents

- If your dialog is complex (involving many conditions, multiple integrations, or detailed workflows), consider creating several simpler Agents, each responsible for a specific task or use case. Then, chain these Agents together to complete the full dialog flow.

- The simpler and clearer your prompt, the more reliably the Agent will perform as expected. So, if your current Agent “mostly works but sometimes fails at step 6 and forgets to thank the customer,” it’s a good sign that you should consider splitting it into smaller, more specialized Agents.

Important notes when splitting Agents

- Agents can read the conversation transcript, so they are aware of what’s happening in the dialog.

- Agents do not share prompts with each other. You may need to describe the role of the second Agent explicitly, copy-paste the communication style, and, if necessary, adjust the first Agent’s role description too.

- Agents do not share integration response data. If the second Agent needs data retrieved by the first Agent, instruct the first Agent to save it into contexts. For example:

  “When you receive the following data, save them into the following contexts:

  - context "name" = client's name <0.name>

  - context "city" = client's city <0.city>”
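The `<0.name>` and `<0.city>` references point into the integration response. Assuming they mean “the `name` (or `city`) field of the first item in a JSON array response” (an interpretation; this page does not define the notation), the first Agent’s job can be sketched in Python as follows. `resolve_path` and the sample response are hypothetical.

```python
import json
from typing import Any


def resolve_path(data: Any, path: str) -> Any:
    """Follow a dot-separated path such as '0.name': integers index lists, other parts are dict keys."""
    current = data
    for part in path.split("."):
        current = current[int(part)] if isinstance(current, list) else current[part]
    return current


# Hypothetical integration response; field names mirror the example above.
response = json.loads('[{"name": "Jane Doe", "city": "Bologna"}]')

# What the first Agent is asked to do: copy selected values into named contexts,
# so that a second Agent can read them later.
contexts = {
    "name": resolve_path(response, "0.name"),
    "city": resolve_path(response, "0.city"),
}
print(contexts)
```

Only the values saved this way survive the handover; the rest of the response stays with the first Agent.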

Splitting your workflow into more Agents can sometimes also reduce AI Credits consumption. Everything, including the master prompt, the Agent’s general prompt, output path prompts, and API integration responses, is processed at once and consumes AI Credits with each of the Agent’s replies. With a huge Agent, you may be unnecessarily consuming 2-3 AI Credits per reply for every individual step of the prompt, even though at “Step 1” you might not yet care about “Step 7” at all. By splitting into smaller Agents, you might slightly increase the number of interactions but considerably decrease the overall number of consumed AI Credits, because much smaller prompts are processed at any given moment. If you feel the consumption is too high and you are already using smaller LLM models (see below), consider splitting the large Agent.
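A rough back-of-the-envelope comparison illustrates the point. It assumes, as a simplification, that the cost of each reply scales with the number of prompt tokens processed for that reply and ignores the growing conversation history; all token and reply counts are hypothetical, and the actual AI Credit formula is not described on this page.

```python
def tokens_processed(prompt_tokens: int, replies: int) -> int:
    """Total prompt tokens processed if the whole prompt is re-read on every reply."""
    return prompt_tokens * replies


# One large Agent: every one of its 10 replies re-reads the full 6,000-token prompt.
single_agent = tokens_processed(prompt_tokens=6_000, replies=10)

# Three smaller Agents with 2,000-token prompts; splitting costs two extra replies (12 total).
split_agents = sum(tokens_processed(2_000, replies) for replies in (4, 4, 4))

print(single_agent, split_agents)  # 60000 24000
```

Even with more replies overall, the split setup processes far fewer prompt tokens because each reply only carries the prompt relevant to the current task.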

💡 LLM models

- If you did not set an LLM model yourself, the Agent uses the default GPT-4.1.

- If you feel your descriptions are clear and consistent but the Agent still doesn’t follow them, try switching to a different model, e.g., the “full” models (like GPT-4.1) are more powerful than the “mini” models (like GPT-4.1-mini).

- Note that the “full” models might:

  - consume more AI Credits per interaction (you can check the consumption in Discussion details in the Event logs)

  - have longer response times, which can be noticeable in voicebots

💡 API integrations in Agent

- Make sure to fill out the description box in API Integrations -> Inputs for Agent -> Description. If your description is understandable to a human, it should also be understandable to the Agent.

- If you are going to use multiple endpoints that are similar to one another, take extra care to describe their functions well enough that the Agent can confidently tell them apart.

- If you expect the Agent to collect the required variables by itself, set the corresponding context source to Agent; conversely, if you already have them or don’t want to let the Agent read them, set it to Discussion.

- Don’t set the source of your API keys or access tokens to Agent.

- The API response is also part of the whole prompt, as the Agent must read and process it; large responses lead to large prompts, which may lead to higher AI Credits consumption.

- LLMs work best with APIs that return as little data as possible; sifting through lots of unnecessary response data decreases the success rate.

- If your response is larger than 1 kB, check whether it really contains only necessary data or whether the response can be simplified.
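To check a response against the 1 kB rule of thumb, you can measure its serialized size and strip fields the Agent does not need. The Python sketch below treats 1 kB as 1,000 bytes and uses a hypothetical forecast response; in practice, the trimming would happen in your own API or an intermediate endpoint you control (an assumption about your setup).

```python
import json

SIZE_LIMIT_BYTES = 1000  # the "1 kB" rule of thumb, read here as 1,000 bytes


def size_bytes(payload: object) -> int:
    """Size of the payload as compact UTF-8 JSON."""
    return len(json.dumps(payload, separators=(",", ":")).encode("utf-8"))


def trim_response(payload: dict, keep: list) -> dict:
    """Keep only the top-level fields the Agent actually needs."""
    return {key: payload[key] for key in keep if key in payload}


# Hypothetical forecast response: seven daily values plus a week of hourly data the Agent never uses.
full = {
    "daily": {"max_c": [24, 25, 23, 22, 26, 27, 25], "min_c": [15, 16, 14, 13, 17, 18, 16]},
    "hourly_temperature_c": [round(10 + (h % 24) * 0.5, 1) for h in range(168)],
    "hourly_humidity_pct": [60] * 168,
}
trimmed = trim_response(full, keep=["daily"])

print(size_bytes(full) > SIZE_LIMIT_BYTES, size_bytes(trimmed) <= SIZE_LIMIT_BYTES)  # True True
```

The daily summary is all the example prompt asks the Agent to communicate, so dropping the hourly arrays shrinks the prompt without losing anything the Agent uses.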
